How to Scrape Baidu's COVID-19 Data with Python and the Scrapy Framework

This article walks through scraping Baidu's real-time COVID-19 data with Python and the Scrapy framework, using Selenium to render the page. I hope it is a useful reference.

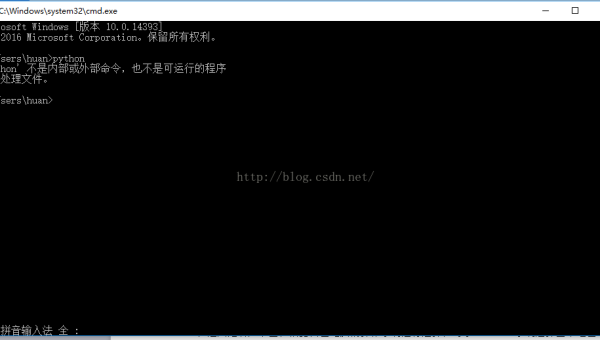

Environment setup

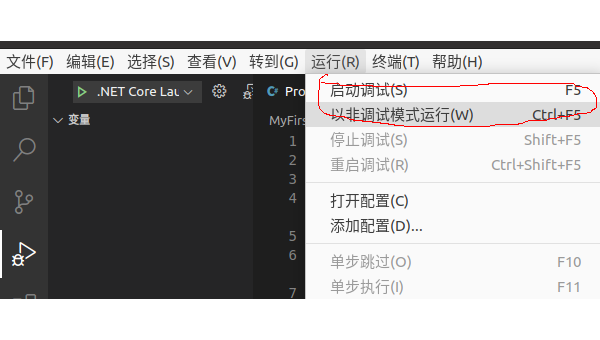

A brief rundown of the recommended setup:

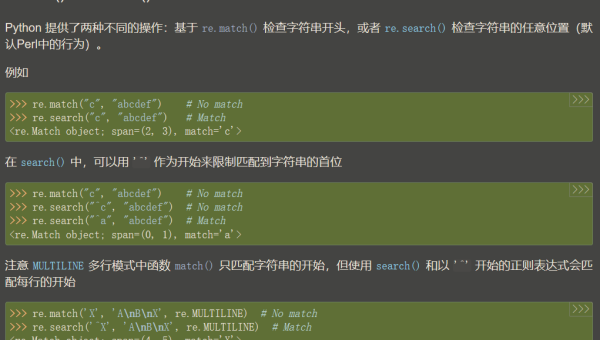

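Recommended plugin

First, a recommended Google Chrome extension: XPath Helper, which lets you check directly in the browser whether an XPath expression is correct.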
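XPath Helper works inside Chrome. If you have saved a copy of the rendered page, you can also sanity-check the table paths offline. The sketch below is an assumption-laden illustration: the HTML fragment is hand-written and only mirrors the structure the spider's XPath expressions assume (`#nationTable > table > tbody > tr > td`), the names and numbers are placeholders, and `read_row` is a hypothetical helper. Python's built-in `xml.etree.ElementTree` supports only a subset of XPath (no `text()`, no `contains()`), so the cells are selected first and their text is read in Python.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

# Hypothetical fragment mirroring the structure the spider's XPaths assume.
# Names and numbers are placeholders, not real data.
SAMPLE_HTML = (
    "<div><div id='nationTable'><table><tbody>"
    "<tr><td><div><span>省份A</span></div></td>"
    "<td>1</td><td>2</td><td>3</td><td>4</td><td>5</td></tr>"
    "<tr><td><div><span>省份B</span></div></td>"
    "<td>0</td><td>0</td><td>7</td><td>7</td><td>0</td></tr>"
    "</tbody></table></div></div>"
)


def read_row(root: ET.Element, index: int) -> Optional[Tuple[str, List[str]]]:
    """Return (name, numbers) for table row `index` (1-based), or None if absent."""
    cells = root.findall(f".//*[@id='nationTable']/table/tbody/tr[{index}]/td")
    if not cells:
        return None
    # Equivalent of td/div/span/text(): the name sits in the first cell's <span>.
    span = cells[0].find("div/span")
    name = span.text if span is not None else None
    # Equivalent of td/text(): keep only cells with direct text (skips the name cell).
    numbers = [td.text for td in cells if td.text is not None]
    return name, numbers


root = ET.fromstring(SAMPLE_HTML)
print(read_row(root, 1))
```

For the exact expressions (with `text()` and `contains()`), XPath Helper or Scrapy's own selectors remain the better check.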

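Scraping target

The page to scrape is Baidu's 实时更新:新型冠状病毒肺炎疫情地图 (Real-time Updates: COVID-19 Epidemic Map) at https://voice.baidu.com/act/newpneumonia/newpneumonia#tab0.

The targets are the national figures and the figures for each province.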

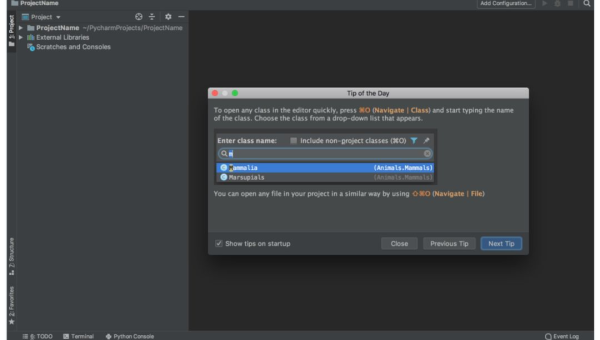

Creating the project

Create the project with the scrapy command:

```
scrapy startproject yqsj
```

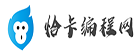

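Setting up WebDriver

I won't repeat the setup here; see the deployment steps in my earlier article "Python 详解通过Scrapy框架实现爬取CSDN全站热榜标题热词流程" (a walkthrough of scraping trending-title keywords from CSDN's site-wide hot list with Scrapy).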

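Project code

Let's start coding. First, note a quirk of the province data on Baidu's page.

The province table is collapsed: you have to click the "展开全部" (expand all) span before all rows appear. So when fetching the page source, we need to open the page in a simulated browser and click that button first. We'll build the project step by step along those lines.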

Defining the items

Define two classes in items.py: YqsjProvinceItem for per-province data and YqsjChinaItem for the national data.

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class YqsjProvinceItem(scrapy.Item):
    # Per-province data
    location = scrapy.Field()  # province name
    new = scrapy.Field()       # newly confirmed
    exist = scrapy.Field()     # currently confirmed
    total = scrapy.Field()     # cumulative confirmed
    cure = scrapy.Field()      # cumulative recovered
    dead = scrapy.Field()      # cumulative deaths


class YqsjChinaItem(scrapy.Item):
    # National data
    # Currently confirmed
    exist_diagnosis = scrapy.Field()
    # Asymptomatic
    asymptomatic = scrapy.Field()
    # Currently suspected
    exist_suspecte = scrapy.Field()
    # Currently severe
    exist_severe = scrapy.Field()
    # Cumulative confirmed
    cumulative_diagnosis = scrapy.Field()
    # Imported from abroad
    overseas_input = scrapy.Field()
    # Cumulative recovered
    cumulative_cure = scrapy.Field()
    # Cumulative deaths
    cumulative_dead = scrapy.Field()
```
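As an alternative to `scrapy.Item` subclasses, Scrapy 2.2 and later also accept dataclass instances as items (through the itemadapter package). A minimal sketch with the same fields; the class names `ProvinceRecord` and `ChinaRecord` are hypothetical:

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ProvinceRecord:
    """Dataclass counterpart of YqsjProvinceItem (hypothetical class name)."""
    location: Optional[str] = None  # province name
    new: Optional[str] = None       # newly confirmed
    exist: Optional[str] = None     # currently confirmed
    total: Optional[str] = None     # cumulative confirmed
    cure: Optional[str] = None      # cumulative recovered
    dead: Optional[str] = None      # cumulative deaths


@dataclass
class ChinaRecord:
    """Dataclass counterpart of YqsjChinaItem (hypothetical class name)."""
    exist_diagnosis: Optional[str] = None
    asymptomatic: Optional[str] = None
    exist_suspecte: Optional[str] = None
    exist_severe: Optional[str] = None
    cumulative_diagnosis: Optional[str] = None
    overseas_input: Optional[str] = None
    cumulative_cure: Optional[str] = None
    cumulative_dead: Optional[str] = None


record = ProvinceRecord(location="省份A", new="1")
print(asdict(record))
```

Dataclasses use attribute access (`record.location`), so the pipeline's `item['location']` lookups and `isinstance` checks would need adjusting; `itemadapter.ItemAdapter(item)` gives dict-style access to either kind.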

Defining the middleware

The downloader middleware opens the page in the browser, clicks "展开全部" (expand all) so that every province row is rendered, and hands the rendered HTML back to Scrapy.

Complete code (middlewares.py):

```python
# Define here the models for your spider middleware
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

import time

from scrapy import signals
from scrapy.http import HtmlResponse
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.common.by import By


class YqsjSpiderMiddleware:
    # Unchanged template generated by `scrapy startproject`.
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create the middleware.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.
        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.
        # Must return an iterable of Request, or item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.
        # Should return either None or an iterable of Request or item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider; works like
        # process_spider_output(), except that there is no response.
        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)


class YqsjDownloaderMiddleware:
    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create the middleware.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        crawler.signals.connect(s.spider_closed, signal=signals.spider_closed)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader middleware.
        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        try:
            spider.browser.get(request.url)
            spider.browser.maximize_window()
            time.sleep(2)
            # Click the "展开全部" (expand all) span so every province row is rendered.
            # find_element_by_xpath() was removed in Selenium 4.3; use find_element(By.XPATH, ...).
            spider.browser.find_element(By.XPATH, "//*[@id='nationTable']/div/span").click()
            time.sleep(5)
            # Return the rendered page; Scrapy then skips its own download.
            return HtmlResponse(
                url=spider.browser.current_url,
                body=spider.browser.page_source,
                encoding="utf-8",
                request=request,
            )
        except TimeoutException as e:
            spider.logger.warning('Timeout: {}'.format(e))
            spider.browser.execute_script('window.stop()')
        # On timeout, return None so Scrapy downloads the URL itself.
        return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.
        # Must either return a Response object, return a Request object,
        # or raise IgnoreRequest.
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

    def spider_closed(self, spider):
        # Quit the browser once, when the crawl ends, rather than after each
        # request, so that later requests still have a live browser.
        spider.browser.quit()
```
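The middleware above waits with fixed `time.sleep()` pauses, which are either longer than needed or, on a slow connection, too short. Polling until a condition holds is more robust. Selenium ships this as `WebDriverWait`; for example, `WebDriverWait(spider.browser, 10).until(EC.element_to_be_clickable((By.XPATH, "//*[@id='nationTable']/div/span"))).click()`, with `WebDriverWait` from `selenium.webdriver.support.ui` and `EC` being `selenium.webdriver.support.expected_conditions`. The standard-library sketch below only illustrates the polling idea behind it; `wait_until` is a hypothetical helper, not part of Selenium.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.5) -> T:
    """Call `condition` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)
```

Returning the truthy result (rather than just `True`) mirrors `WebDriverWait.until`, which hands back whatever the condition returned, such as the clickable element.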

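Defining the spider

The spider extracts the national figures and the per-province figures. Complete code (spiders/baidu_yq.py):

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Time    : 2021/11/7 22:05
# @Author  : 至尊宝
# @Site    :
# @File    : baidu_yq.py

import scrapy
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service

from yqsj.items import YqsjChinaItem, YqsjProvinceItem


class YqsjSpider(scrapy.Spider):
    name = 'yqsj'
    # allowed_domains = ['blog.csdn.net']
    start_urls = ['https://voice.baidu.com/act/newpneumonia/newpneumonia#tab0']

    # The class name looks build-generated and may change when Baidu updates the page.
    china_xpath = "//div[contains(@class, 'VirusSummarySix_1-1-317_2ZJJBJ')]/text()"
    # Numeric cells of province row {}
    province_xpath = "//*[@id='nationTable']/table/tbody/tr[{}]/td/text()"
    # Province name of row {}
    province_xpath_1 = "//*[@id='nationTable']/table/tbody/tr[{}]/td/div/span/text()"

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        chrome_options = Options()
        chrome_options.add_argument('--headless')  # run Chrome in headless mode
        chrome_options.add_argument('--disable-gpu')
        chrome_options.add_argument('--no-sandbox')
        # Current Selenium 4 releases take the driver path via Service and the
        # options via options=; chrome_options= and executable_path= are gone.
        self.browser = webdriver.Chrome(
            service=Service(executable_path="E:\\chromedriver_win32\\chromedriver.exe"),
            options=chrome_options,
        )
        self.browser.set_page_load_timeout(30)

    def parse(self, response, **kwargs):
        # National summary: eight figures in a fixed order
        country_info = response.xpath(self.china_xpath)
        if len(country_info) >= 8:
            yq_china = YqsjChinaItem()
            yq_china['exist_diagnosis'] = country_info[0].get()
            yq_china['asymptomatic'] = country_info[1].get()
            yq_china['exist_suspecte'] = country_info[2].get()
            yq_china['exist_severe'] = country_info[3].get()
            yq_china['cumulative_diagnosis'] = country_info[4].get()
            yq_china['overseas_input'] = country_info[5].get()
            yq_china['cumulative_cure'] = country_info[6].get()
            yq_china['cumulative_dead'] = country_info[7].get()
            yield yq_china
        else:
            self.logger.warning('National summary not found; check china_xpath')

        # Iterate over the 34 provincial-level regions (table rows tr[1] to tr[34])
        for x in range(1, 35):
            province_info = response.xpath(self.province_xpath.format(x))
            province_name = response.xpath(self.province_xpath_1.format(x))
            # Skip missing or incomplete rows instead of raising IndexError
            if len(province_info) < 5:
                continue
            yq_province = YqsjProvinceItem()
            yq_province['location'] = province_name.get()
            yq_province['new'] = province_info[0].get()
            yq_province['exist'] = province_info[1].get()
            yq_province['total'] = province_info[2].get()
            yq_province['cure'] = province_info[3].get()
            yq_province['dead'] = province_info[4].get()
            yield yq_province
```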
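The parse() method above copies scraped texts into item fields one index at a time. A more compact variant keeps the field order in a list and zips it with the values. The sketch below assumes the same field order as parse(); `CHINA_FIELDS`, `PROVINCE_FIELDS`, `map_fields` and `province_row` are hypothetical names, and the helpers return None when fewer values than expected were found.

```python
from typing import Dict, List, Optional

# Field order assumed by parse(): country_info[0] -> exist_diagnosis, ...,
# country_info[7] -> cumulative_dead.
CHINA_FIELDS = [
    "exist_diagnosis", "asymptomatic", "exist_suspecte", "exist_severe",
    "cumulative_diagnosis", "overseas_input", "cumulative_cure", "cumulative_dead",
]
# Order of the numeric cells in a province row (province_info[0] to [4]).
PROVINCE_FIELDS = ["new", "exist", "total", "cure", "dead"]


def map_fields(fields: List[str], values: List[str]) -> Optional[Dict[str, str]]:
    """Pair field names with scraped texts; None if fewer values than fields."""
    if len(values) < len(fields):
        return None
    return {name: value.strip() for name, value in zip(fields, values)}


def province_row(name: Optional[str], cells: List[str]) -> Optional[Dict[str, str]]:
    """Build a province record, or None if the name or any number is missing."""
    record = map_fields(PROVINCE_FIELDS, cells)
    if record is None or not name:
        return None
    record["location"] = name.strip()
    return record
```

Inside parse() this could be used as `data = map_fields(CHINA_FIELDS, response.xpath(self.china_xpath).getall())`, followed by `yield YqsjChinaItem(**data)` when `data` is not None.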

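Pipeline: writing the results to a text file

The pipeline writes the results to result.txt in a simple text format. Complete code (pipelines.py):

```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

from yqsj.items import YqsjChinaItem, YqsjProvinceItem


class YqsjPipeline:
    def open_spider(self, spider):
        # Open the output file once, when the crawl starts.
        self.file = open('result.txt', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # Output labels stay in Chinese: 国内疫情 national data, 现有确诊 currently
        # confirmed, 无症状 asymptomatic, 现有疑似 currently suspected, 现有重症
        # currently severe, 累计确诊 cumulative confirmed, 境外输入 imported,
        # 累计治愈 cumulative recovered, 累计死亡 cumulative deaths.
        if isinstance(item, YqsjChinaItem):
            self.file.write(
                "国内疫情\n现有确诊\t{}\n无症状\t{}\n现有疑似\t{}\n现有重症\t{}\n"
                "累计确诊\t{}\n境外输入\t{}\n累计治愈\t{}\n累计死亡\t{}\n".format(
                    item['exist_diagnosis'], item['asymptomatic'],
                    item['exist_suspecte'], item['exist_severe'],
                    item['cumulative_diagnosis'], item['overseas_input'],
                    item['cumulative_cure'], item['cumulative_dead'],
                )
            )
        elif isinstance(item, YqsjProvinceItem):
            # One line per province: 省份 province, 新增 new, 现有 current,
            # 累计 cumulative, 治愈 recovered, 死亡 deaths.
            self.file.write(
                "省份:{}\t新增:{}\t现有:{}\t累计:{}\t治愈:{}\t死亡:{}\n".format(
                    item['location'], item['new'], item['exist'],
                    item['total'], item['cure'], item['dead'],
                )
            )
        return item

    def close_spider(self, spider):
        self.file.close()
```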
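YqsjPipeline writes a custom text layout. If you would rather open the province data in a spreadsheet, a CSV pipeline is a small variation. In this sketch, `ProvinceCsvPipeline` and the default file name provinces.csv are hypothetical. It recognizes province items by their `location` key rather than by `isinstance`, so it works with `scrapy.Item` objects and plain dicts alike; enabling it would mean adding it to ITEM_PIPELINES (for example `'yqsj.pipelines.ProvinceCsvPipeline': 310`, assuming it lives in pipelines.py).

```python
import csv


class ProvinceCsvPipeline:
    """Hypothetical pipeline: write province rows to a CSV file."""

    fieldnames = ["location", "new", "exist", "total", "cure", "dead"]

    def __init__(self, path="provinces.csv"):
        self.path = path
        self.file = None
        self.writer = None

    def open_spider(self, spider):
        # newline="" lets the csv module control line endings itself.
        self.file = open(self.path, "w", newline="", encoding="utf-8")
        self.writer = csv.DictWriter(self.file, fieldnames=self.fieldnames)
        self.writer.writeheader()

    def process_item(self, item, spider):
        row = dict(item)  # scrapy.Item and dict both convert cleanly
        # Only province items carry "location"; other items pass through untouched.
        if "location" in row:
            self.writer.writerow({key: row.get(key) for key in self.fieldnames})
        return item

    def close_spider(self, spider):
        self.file.close()
```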

Settings changes

Use the following as a reference and adjust as needed (settings.py):

```python
# Scrapy settings for yqsj project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'yqsj'

SPIDER_MODULES = ['yqsj.spiders']
NEWSPIDER_MODULE = 'yqsj.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# USER_AGENT = 'yqsj (+http://www.yourdomain.com)'
USER_AGENT = 'Mozilla/5.0'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
# CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
# DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
# CONCURRENT_REQUESTS_PER_DOMAIN = 16
# CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
# TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/27.0.1453.94 Safari/537.36',
}

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
SPIDER_MIDDLEWARES = {
    'yqsj.middlewares.YqsjSpiderMiddleware': 543,
}

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'yqsj.middlewares.YqsjDownloaderMiddleware': 543,
}

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
# EXTENSIONS = {
#     'scrapy.extensions.telnet.TelnetConsole': None,
# }

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'yqsj.pipelines.YqsjPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
# AUTOTHROTTLE_ENABLED = True
# The initial download delay
# AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
# AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
# AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
# HTTPCACHE_ENABLED = True
# HTTPCACHE_EXPIRATION_SECS = 0
# HTTPCACHE_DIR = 'httpcache'
# HTTPCACHE_IGNORE_HTTP_CODES = []
# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```
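As an alternative (or in addition) to the custom text pipeline, Scrapy's built-in feed exports can serialize every item without extra code. A minimal settings.py sketch, assuming Scrapy 2.1 or later (where the FEEDS setting was introduced); the file name result.jsonl is only an example:

```python
# settings.py (sketch): export every scraped item as JSON Lines.
FEEDS = {
    "result.jsonl": {
        "format": "jsonlines",
        # Write UTF-8 so Chinese text stays readable instead of \uXXXX escapes.
        "encoding": "utf8",
    },
}
```

For a one-off run, passing `-o result.jsonl` to `scrapy crawl` has a similar effect.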

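Verifying the results

Run the spider with `scrapy crawl yqsj` from the project directory, then open result.txt to check the output.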
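To check the output programmatically, a small sketch can parse the province lines that YqsjPipeline writes (省份:…\t新增:…\t现有:…\t累计:…\t治愈:…\t死亡:…) and count them. `read_provinces` is a hypothetical helper; the pattern accepts both ASCII and full-width colons in case the labels use either.

```python
import re
from typing import Dict, List

# One province line as written by YqsjPipeline.
PROVINCE_LINE = re.compile(
    r"^省份[:：](?P<location>[^\t]*)\t新增[:：](?P<new>[^\t]*)\t现有[:：](?P<exist>[^\t]*)"
    r"\t累计[:：](?P<total>[^\t]*)\t治愈[:：](?P<cure>[^\t]*)\t死亡[:：](?P<dead>[^\t]*)$"
)


def read_provinces(text: str) -> List[Dict[str, str]]:
    """Return one dict per province line; national-summary lines are ignored."""
    rows = []
    for line in text.splitlines():
        match = PROVINCE_LINE.match(line)
        if match:
            rows.append(match.groupdict())
    return rows
```

Usage: `with open("result.txt", encoding="utf-8") as f: rows = read_provinces(f.read())`. Since the spider's loop visits rows tr[1] to tr[34], a complete run should yield 34 entries.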

Thanks for reading. I hope this article on scraping Baidu's COVID-19 data with Python and Scrapy has been helpful. For more, follow the industry news channel on 恰卡编程网.
