How to Use Spark SQL


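This article walks through a minimal Spark SQL example in Java: declare the Maven dependencies, turn an RDD of JavaBeans into a DataFrame, register it as a temporary table, and query it with SQL. The expected console output is shown in comments next to each call.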

pom.xml

    <dependencies>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.10</artifactId>
            <version>2.1.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_2.10</artifactId>
            <version>2.1.0</version>
        </dependency>
    </dependencies>

Java:

    import java.io.Serializable;
    import java.util.Arrays;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.sql.Dataset;
    import org.apache.spark.sql.Row;
    import org.apache.spark.sql.SparkSession;

    public class SparkSqlTest {

        // JavaBean used as the row type; Spark derives the schema from its getters.
        public static class Person implements Serializable {
            private static final long serialVersionUID = -6259413972682177507L;

            private String name;
            private int age;

            public Person(String name, int age) {
                this.name = name;
                this.age = age;
            }

            @Override
            public String toString() {
                return name + ": " + age;
            }

            public String getName() {
                return name;
            }

            public void setName(String name) {
                this.name = name;
            }

            public int getAge() {
                return age;
            }

            public void setAge(int age) {
                this.age = age;
            }
        }

        public static void main(String[] args) {
            SparkConf conf = new SparkConf().setAppName("Test").setMaster("local");
            JavaSparkContext sc = new JavaSparkContext(conf);
            // getOrCreate() picks up the SparkContext created above instead of starting a new one.
            SparkSession spark = SparkSession.builder().appName("Test").getOrCreate();

            // Parse "name,age" lines into Person beans.
            JavaRDD<String> input = sc.parallelize(Arrays.asList("abc,1", "test,2"));
            JavaRDD<Person> persons = input.map(s -> s.split(",")).map(s -> new Person(s[0], Integer.parseInt(s[1])));
            // [abc: 1, test: 2]
            System.out.println(persons.collect());

            // Build a DataFrame whose columns come from the Person bean properties.
            Dataset<Row> df = spark.createDataFrame(persons, Person.class);
            /*
            +---+----+
            |age|name|
            +---+----+
            |  1| abc|
            |  2|test|
            +---+----+
            */
            df.show();
            /*
            root
             |-- age: integer (nullable = false)
             |-- name: string (nullable = true)
            */
            df.printSchema();

            // Register the DataFrame under the name "person" through the public
            // Dataset API, then query it with SparkSession.sql(); this replaces the
            // internal SQLContext constructor and registerDataFrameAsTable call.
            df.createOrReplaceTempView("person");
            /*
            +---+----+
            |age|name|
            +---+----+
            |  2|test|
            +---+----+
            */
            spark.sql("SELECT * FROM person WHERE age > 1").show();

            sc.close();
        }
    }
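
One detail in the output above is easy to miss: the columns appear as `age`, `name`, not in the order the fields are declared in `Person`. As far as can be told from Spark's behavior, `createDataFrame(rdd, beanClass)` discovers columns by inspecting the bean's getters through the standard JavaBeans introspector rather than by reading fields. The sketch below uses only the JDK (no Spark) to show what that introspection reports for a bean shaped like `Person`. The names `BeanColumns` and `readableProperties` are hypothetical and exist only for this illustration; they are not Spark APIs.

```java
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Spark: lists the readable JavaBean
// properties of a class in the order the JDK introspector reports them.
final class BeanColumns {

    // Same shape as the Person bean in the article's example.
    public static class Person {
        private String name;
        private int age;

        public String getName() { return name; }
        public void setName(String name) { this.name = name; }
        public int getAge() { return age; }
        public void setAge(int age) { this.age = age; }
    }

    static List<String> readableProperties(Class<?> beanClass) {
        List<String> names = new ArrayList<>();
        try {
            for (PropertyDescriptor pd : Introspector.getBeanInfo(beanClass).getPropertyDescriptors()) {
                // Skip the "class" property contributed by Object.getClass()
                // and any property that has no getter.
                if (!"class".equals(pd.getName()) && pd.getReadMethod() != null) {
                    names.add(pd.getName());
                }
            }
        } catch (IntrospectionException e) {
            throw new IllegalStateException("Cannot introspect " + beanClass, e);
        }
        return names;
    }

    public static void main(String[] args) {
        // Prints the property names that would become column names.
        System.out.println(readableProperties(Person.class));
    }
}
```

On common JDK builds the descriptors come back sorted by property name, which matches the `age`, `name` column order seen in `df.show()`. That ordering is observed behavior rather than a documented guarantee, so select columns by name instead of relying on their position.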

That covers the basic usage of Spark SQL. The quickest way to get comfortable with it is to run the example yourself and experiment with different queries.

推荐阅读

-

实用microsoft(sql server 7 教程 怎么配置sqlserver的远程连接)

怎么配置sqlserver的远程连接?SQLServer2008默认是不愿意远程桌面的,如果不是想在本地用SSMS连接远战服务器...

-

如何使用 SQL Server FILESTREAM 存储非结构化数据?

-

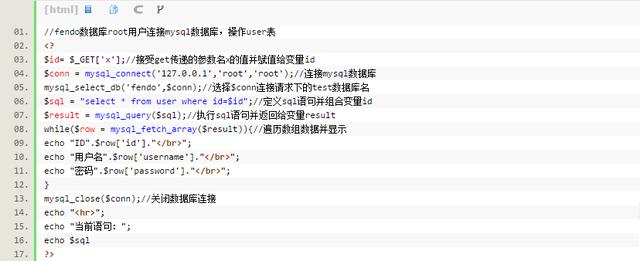

安全攻防六:SQL注入,明明设置了强密码,为什么还会被别人登录

-

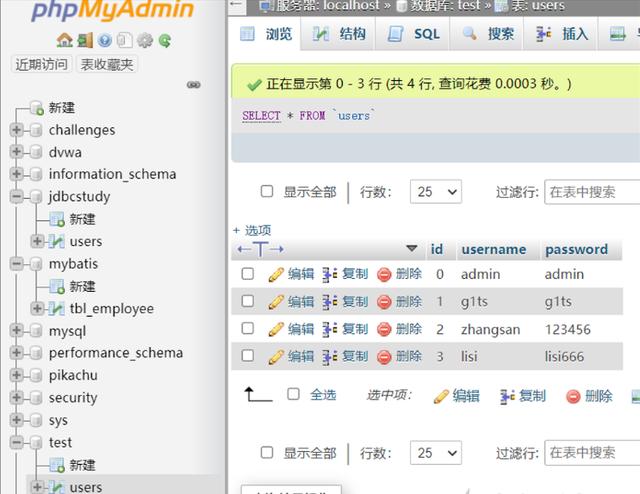

利用PHP访问MySql数据库以及增删改查实例操作

关于利用PHP访问MySql数据库的逻辑操作以及增删改查实例操作PHP访问MySql数据库˂?php//造连...

-

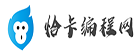

SQL注入速查表

-

「Web安全」SQL注入的基石

-

每个互联网人才都应该知道的SQL注入

-

MySQL中防止SQL注入

喜欢本文章请关注点赞加转发如何保护数据免受SQL注入攻击?采取措施保护数据免受基于应用程序的攻击,例如SQL注入。千...

-

mybatis中如何防止sql注入和传参

-

SQL注入之环境搭建(二)-PHP+Mysql注入环境搭建